Speed Up Compound Full-Text Searches in PostgreSQL by 300x

Querying your database across associations can become a bottleneck in your Rails application. Julian Rubisch, our first guest author, explains why and suggests an efficient solution.

Full-text Search Approaches

Full-text search is a vital part of many web applications. Approaches to tackle this challenge are as diverse as the circumstances under which your app operates:

- If you have to combine documents from many sources, for example, you are likely going to reach for Elasticsearch. If you want a Rails integration the Searchkick gem has you covered.

- If you want a solution that’s similar to Elasticsearch’s capabilities but less devops-intensive, consider Meilisearch, which has a single-node architecture.

- If you want a fully managed, off-site solution, you could take a look at Algolia, for example.

- If you want to go full cutting edge, you could use a local LLM implementation to answer questions about documents in your application

Chances are, though, either of these solutions are breaking a fly on a wheel for your application. If you are using Postgresql as your database management system, the pg_search gem might be a perfect, low friction fit that doesn’t introduce additional operational complexity.

Note: Do not confuse this with the Postgres extension of the same name which is built and maintained by ParadeDB.

pg_search Primer

Let’s quickly recap the functionality of this gem. If you are already familiar with it, you might want to skip this section and continue reading below.

Generally, after installing the gem into your application bundle, you include the PgSearch::Model module in any model that you want to equip with full-text search capabilities.

Then, pg_search offers two distinct options for setting up search in your app:

- Multisearch: This allows to collect attributes from many models into one combined search index. This option is most suitable for apps that want to implement a global generic text search, like e-commerce sites.

You set this method up by executing the migrations to install the global search index:

$ bin/rails g pg_search:migration:multisearch

$ bin/rails db:migrate

Afterwards, you define what attributes you want to add to the index in your models using the multisearchable class method:

class Book < ApplicationRecord

include PgSearch::Model

multisearchable against: [:title, :author]

end

class Shoe < ApplicationRecord

include PgSearch::Model

multisearchable against: [:brand]

end

Once that is done, you can query the global search index like so:

PgSearch.multisearch("Shakespeare")

PgSearch.multisearch("Onitsuka Tiger")

- Search Scopes: Here, you specify one or more search scopes on a specific model. This allows for more fine-grained definition of search options, and is most suited for filtering a list of items of a certain category (as in faceted search, for example).

class Book < ApplicationRecord

include PgSearch::Model

# search by one attribute

pg_search_scope :search_by_author, against: :author

# search by many attributes

pg_search_scope :search_by_author_and_title, against: [:author, :title]

end

In a nutshell, this technique is a wrapper method to create an optimal SQL query to execute against your database tables. Note that there are many configuration options for full-text search in Postgresql that are beyond the scope of this article.

Using this approach, it is also up to you to create the necessary database indexes to speed up your search queries. The best way to go about this is to watch the development server logs, extract the relevant queries and create a database migration to add an index. In the example above, it might look like this:

class AddFullTextIndexes < ActiveRecord::Migration[7.2]

def up

add_index :books, "to_tsvector('english', author)", using: :gin

add_index :books, "(to_tsvector('english', coalesce(\"books\".\"author\"::text, '')) || to_tsvector('english', coalesce(\"books\".\"title"::text, '')))", using: :gin

end

end

Both indexes use the GIN index type, which stands for generalized inverted index. It is optimal for handling composite values such as documents (as in our case, text) because it contains a sepeparate entry for each component value. That’s why to_tsvector is used to first split the text into separate tokens (like words, for example).

In the first index, we are adding the index to this to_tsvector expression. In the second case, we first concatenate both author and title columns into one and handle it equivalently.

Search Across Associations

This works fine for singular model, but the moment you’d like to search across associations, you run into a problem. Because these search queries are constructed using JOINs across tables you cannot add database indexes for them, and are unable to speed them up.

Let’s look at an example. Assume that our Book model has a belongs_to association to Genre. We can implement a full text search that includes the genre name like this:

class Genre < ApplicationRecord

has_many :books

end

class Book < ApplicationRecord

include PgSearch::Model

belongs_to :genre

pg_search_scope :search,

against: [:author, :title],

associated_against: {genre: :name}

end

To illustrate the issue with search crossing table borders using JOIN, let’s look at the search query this produces:

pg-search-demo(dev)> Book.search("Shakespeare")

Book Load (3.7ms)

SELECT "books".*

FROM "books"

INNER JOIN (

SELECT

"books"."id" AS pg_search_id,

(ts_rank((to_tsvector('simple', coalesce(("books"."author")::text, '')) ||

to_tsvector('simple', coalesce(("books"."title")::text, '')) ||

to_tsvector('simple', coalesce(

(pg_search_e5aa4fdcd99c3ae4c52867.pg_search_45d3be0aa09508056c3caa)::text,

''))), (to_tsquery('simple', ''' ' || 'Shakespeare' || ' ''')),

0)) AS rank

FROM "books"

LEFT OUTER JOIN (

SELECT

"books"."id" AS id,

"genres"."name"::text AS pg_search_45d3be0aa09508056c3caa

FROM "books"

INNER JOIN "genres" ON "genres"."id" = "books"."genre_id") pg_search_e5aa4fdcd99c3ae4c52867

ON pg_search_e5aa4fdcd99c3ae4c52867.id = "books"."id"

WHERE (

(to_tsvector('simple', coalesce(("books"."author")::text, '')) ||

to_tsvector('simple', coalesce(("books"."title")::text, '')) || to_tsvector('simple',

coalesce((pg_search_e5aa4fdcd99c3ae4c52867.pg_search_45d3be0aa09508056c3caa)::text, ''))) @@

(to_tsquery('simple', ''' ' || 'Shakespeare' || ' ''')))) AS pg_search_6e317bcd6839e887739541

ON "books"."id" = pg_search_6e317bcd6839e887739541.pg_search_id

ORDER BY pg_search_6e317bcd6839e887739541.rank DESC, "books"."id" ASC

LIMIT 11

By all means, this is not a pleasant sight. But more importantly, as the WHERE clause is referring to a field in the LEFT OUTER JOIN which you cannot cover with a regular index.

Materialized View to the Rescue

What can we do to remedy this? In our case, we will denormalize the two tables into a Postgres materialized view. To manage our new view, connecting it to the application, we’ll employ the scenic gem:

$ bundle add scenic

Let’s walk through setting up a materialized view and using it for search purposes step by step:

1. Creating the View

We are going to call our view Books::Compound because we want its value to span multiple columns and tables referring to a book.

$ bin/rails g scenic:model Books::Compound --materialized

This will create a couple of things. First, a migration to create a materialized view:

class CreateBooksCompounds < ActiveRecord::Migration[8.0]

def change

create_view :books_compounds, materialized: true

end

end

Second, an empty db/views/books_compounds_v01.sql file for you to define the view in. Let’s do this right now:

SELECT

books.id AS id,

coalesce((books.author)::text, '') || ' ' ||

coalesce((books.title)::text, '') || ' ' ||

coalesce((genres.name)::text, '') AS book_search

FROM books

INNER JOIN genres

ON genres.id = books.genre_id

In a nutshell, this query selects two fields into our compound view: The respective book’s id, and a computed book_search one consisting of the book’s author and title, as well as the genre’s name columns.

Don’t forget to run the migration:

$ bin/rails db:migrate

Finally, it creates a Books::Compound model backed by the new books_compounds view, and prepopulated with two convenience methods:

# app/models/books/compound.rb

class Books::Compound < ApplicationRecord

def self.refresh

Scenic.database.refresh_materialized_view(table_name, concurrently: false, cascade: false)

end

def self.populated?

Scenic.database.populated?(table_name)

end

end

To initially populate the view, call Books::Compound.refresh in the Rails console. Given a Genre record with the name “Drama”, and a Book titled “Julius Caesar” by “William Shakespeare”, this is what our database will look like:

pg_search_demo_development=# SELECT id, name FROM genres;

id | name

----+-------

1 | Drama

(1 row)

pg_search_demo_development=# SELECT id, title, author FROM books;

id | title | author

----+---------------+---------------------

1 | Julius Caesar | William Shakespeare

(1 row)

pg_search_demo_development=# SELECT id, book_search FROM books_compounds;

id | book_search

----+-----------------------------------------

1 | William Shakespeare Julius Caesar Drama

(1 row)

2. Search

Maybe you ask yourself what we’ve won by this. That will become clear in a second, because now we can apply a pg_search_scope directly to the view-based model:

class Books::Compound < ApplicationRecord

+ include PgSearch::Model

+ # cannot be inferred

+ self.primary_key = :id

+ pg_search_scope :search,

+ against: :book_search

def self.refresh

Scenic.database.refresh_materialized_view(table_name, concurrently: false, cascade: false)

end

def self.populated?

Scenic.database.populated?(table_name)

end

end

The only thing we have to be careful of is to directly specify the model’s primary_key, because it cannot be inferred from the schema. Now we can search for compounds like so:

compounds = Books::Compound.search("Drama Shakespeare")

Books::Compound Load (4.6ms)

SELECT "books_compounds".*

FROM "books_compounds"

INNER JOIN (

SELECT

"books_compounds"."id" AS pg_search_id,

(ts_rank((to_tsvector('simple', coalesce(("books_compounds"."book_search")::text, ''))), (to_tsquery('simple', ''' ' || 'Drama' || ' ''') && to_tsquery('simple', ''' ' || 'Shakespeare' || ' ''')), 0)) AS rank

FROM "books_compounds"

WHERE ((to_tsvector('simple', coalesce(("books_compounds"."book_search")::text, ''))) @@ (to_tsquery('simple', ''' ' || 'Drama' || ' ''') && to_tsquery('simple', ''' ' || 'Shakespeare' || ' ''')))) AS pg_search_ceb49107c86be30669f91b

ON "books_compounds"."id" = pg_search_ceb49107c86be30669f91b.pg_search_id /* loading for pp */

ORDER BY pg_search_ceb49107c86be30669f91b.rank DESC, "books_compounds"."id" ASC LIMIT 11 /*application='PgSearchDemo'*/

-- => [#<Books::Compound:0x000000011fba2aa0 id: 1, book_search: "William Shakespeare Julius Caesar Drama">]

>

This is already a tremendous optimization because it avoids the costly LEFT OUTER JOIN operation. We can even go further, though, by adding an index to the materialized view:

class AddIndexToBooksCompounds < ActiveRecord::Migration[8.0]

disable_ddl_transaction!

def change

add_index :books_compounds,

"(to_tsvector('simple', coalesce((book_search, '')))",

using: :gin,

name: "books_compounds_book_search",

algorithm: :concurrently

end

end

When we use this search scope, we get a collection of Books::Compound, not books. To achieve this, we merely have to employ it as a subquery:

> Book.where(id: Books::Compound.search("Drama Shakespeare"))

Let’s compare the query plan of the original implementation against the compound version by running explain on each. To approach a real-world scenario, I’ve created 10_000 books using the Faker gem.

First, let’s use the original search scope:

> Book.search("Fanfiction Carol").explain(:analyze)

Sort (cost=735.96..736.08 rows=50 width=71) (actual time=37.394..37.395 rows=2 loops=1)

Sort Key: (ts_rank(((to_tsvector('simple'::regconfig, COALESCE((books_1.author)::text, ''::text)) || to_tsvector('simple'::regconfig, COALESCE((books_1.title)::text, ''::text))) || to_tsvector('simple'::regconfig, COALESCE((genres.name)::text, ''::text))), '''fanfiction'' & ''carol'''::tsquery, 0)) DESC, books.id

Sort Method: quicksort Memory: 25kB

-> Nested Loop (cost=391.11..734.54 rows=50 width=71) (actual time=18.885..37.389 rows=2 loops=1)

-> Hash Right Join (cost=390.82..678.20 rows=50 width=75) (actual time=18.875..37.374 rows=2 loops=1)

Hash Cond: (books_2.id = books_1.id)

Filter: (((to_tsvector('simple'::regconfig, COALESCE((books_1.author)::text, ''::text)) || to_tsvector('simple'::regconfig, COALESCE((books_1.title)::text, ''::text))) || to_tsvector('simple'::regconfig, COALESCE((genres.name)::text, ''::text))) @@ '''fanfiction'' & ''carol'''::tsquery)

Rows Removed by Filter: 9998

-> Hash Join (cost=31.83..292.19 rows=10000 width=40) (actual time=0.017..1.571 rows=10000 loops=1)

Hash Cond: (books_2.genre_id = genres.id)

-> Seq Scan on books books_2 (cost=0.00..234.00 rows=10000 width=16) (actual time=0.002..0.361 rows=10000 loops=1)

-> Hash (cost=19.70..19.70 rows=970 width=40) (actual time=0.008..0.008 rows=10 loops=1)

Buckets: 1024 Batches: 1 Memory Usage: 9kB

-> Seq Scan on genres (cost=0.00..19.70 rows=970 width=40) (actual time=0.004..0.004 rows=10 loops=1)

-> Hash (cost=234.00..234.00 rows=10000 width=43) (actual time=2.475..2.475 rows=10000 loops=1)

Buckets: 16384 Batches: 1 Memory Usage: 908kB

-> Seq Scan on books books_1 (cost=0.00..234.00 rows=10000 width=43) (actual time=0.005..0.760 rows=10000 loops=1)

-> Index Scan using books_pkey on books (cost=0.29..0.37 rows=1 width=67) (actual time=0.003..0.003 rows=1 loops=2)

Index Cond: (id = books_1.id)

Planning Time: 0.334 ms

Execution Time: 37.445 ms

Now, let’s issue the same search on our materialized view:

> Book.where(id: Books::Compound.search("Fanfiction Carol")).explain(:analyze)

Nested Loop (cost=272.36..280.39 rows=1 width=67) (actual time=2.219..2.227 rows=2 loops=1)

-> HashAggregate (cost=272.07..272.08 rows=1 width=8) (actual time=2.200..2.203 rows=2 loops=1)

Group Key: books_compounds.id

Batches: 1 Memory Usage: 24kB

-> Sort (cost=272.05..272.06 rows=1 width=12) (actual time=2.192..2.195 rows=2 loops=1)

Sort Key: (ts_rank(to_tsvector('simple'::regconfig, COALESCE(books_compounds_1.book_search, ''::text)), '''fanfiction'' & ''carol'''::tsquery, 0)) DESC, books_compounds.id

Sort Method: quicksort Memory: 25kB

-> Hash Join (cost=24.28..272.04 rows=1 width=12) (actual time=1.113..2.185 rows=2 loops=1)

Hash Cond: (books_compounds.id = books_compounds_1.id)

-> Seq Scan on books_compounds (cost=0.00..210.00 rows=10000 width=8) (actual time=0.005..0.838 rows=10000 loops=1)

-> Hash (cost=24.27..24.27 rows=1 width=57) (actual time=0.354..0.355 rows=2 loops=1)

Buckets: 1024 Batches: 1 Memory Usage: 9kB

-> Bitmap Heap Scan on books_compounds books_compounds_1 (cost=20.01..24.27 rows=1 width=57) (actual time=0.341..0.343 rows=2 loops=1)

Recheck Cond: (to_tsvector('simple'::regconfig, COALESCE(book_search, ''::text)) @@ '''fanfiction'' & ''carol'''::tsquery)

Heap Blocks: exact=2

-> Bitmap Index Scan on books_compounds_book_search (cost=0.00..20.01 rows=1 width=0) (actual time=0.335..0.335 rows=2 loops=1)

Index Cond: (to_tsvector('simple'::regconfig, COALESCE(book_search, ''::text)) @@ '''fanfiction'' & ''carol'''::tsquery)

-> Index Scan using books_pkey on books (cost=0.29..8.30 rows=1 width=67) (actual time=0.008..0.009 rows=1 loops=2)

Index Cond: (id = books_compounds.id)

Planning Time: 0.984 ms

Execution Time: 2.302 ms

Observe that the version leveraging the materialized view is about 18.5 times faster in this simple case!

3. Keeping It Up To Date

Of course, there’s also a downside to this approach. Regular database views don’t need updating because they merely encapsulate a specific SELECT. In other words, this stored query is run every time the view is called, hence there’s no risk of returning stale data.

Materialized views, though, need a trigger to update when their underlying data changes.

In a first step, let’s add a model callback to achieve this:

class Book < ApplicationRecord

include PgSearch::Model

belongs_to :genre

pg_search_scope :search,

against: [:author, :title],

associated_against: {genre: :name}

+ after_save :refresh_compound

+ def refresh_compound

+ Books::Compound.refresh

+ end

end

Now, whenever a book record is updated, so will the compound view. An issue though is that we need to add the same functionality to Genre. To reduce duplication, let’s add a concern for this:

# app/models/concerns/book_compound_ingredient.rb

module BookCompoundIngredient

extend ActiveSupport::Concern

included do

after_save :refresh_compound

end

def refresh_compound

Books::Compound.refresh

end

end

class Book < ApplicationRecord

include PgSearch::Model

+ include BookCompoundIngredient

belongs_to :genre

pg_search_scope :search,

against: [:author, :title],

associated_against: {genre: :name}

end

class Genre < ApplicationRecord

+ include BookCompoundIngredient

has_many :books

end

This looks great! There’s one final concern (pun intended) with this architecture though: As the view grows larger, refreshing may take a long time. The best idea, thus, would be to move this to a job:

$ bin/rails g job RefreshBooksCompound

# app/jobs/refresh_books_compound_job.rb

class RefreshBooksCompoundJob < ApplicationJob

queue_as :default

def perform

Books::Compound.refresh

end

end

In the after_save callback, we now simply call it:

module BookCompoundIngredient

extend ActiveSupport::Concern

included do

after_save :refresh_compound

end

def refresh_compound

- Books::Compound.refresh

+ RefreshBooksCompoundJob.perform_later

end

end

4. Bonus: Concurrent Refreshes

There’s still an optimization we can take into account. The default mode of performing these refreshes is non-concurrent, locking the whole view for selects while updating the underlying data. Clearly this is not ideal - just imagine what would happen if you have some a more complex architecture spanning multiple associations. A non-concurrent refresh can easily lock your view for seconds.

Locking can be avoided though by using the concurrent mode. The only requirement is that you need to add a unique index covering all rows to the view. Since we are mirroring the book id into the view, this is quite simple:

$ bin/rails g migration AddUniqueIndexToBooksCompounds

class AddUniqueIndexToBooksCompounds < ActiveRecord::Migration[8.0]

disable_ddl_transaction!

def change

add_index :books_compounds, :id, unique: true, algorithm: :concurrently

end

end

Now we can switch on concurrent mode in our Books::Compound model:

class Books::Compound < ApplicationRecord

include PgSearch::Model

# cannot be inferred

self.primary_key = :id

pg_search_scope :search,

against: :book_search

def self.refresh

- Scenic.database.refresh_materialized_view(table_name, concurrently: false, cascade: false)

+ Scenic.database.refresh_materialized_view(table_name, concurrently: true, cascade: false)

end

def self.populated?

Scenic.database.populated?(table_name)

end

end

For completeness sake, note that the cascade option will respect materialized views that depend on other materialized views. In that case, the cascade will refresh dependencies first, then the views that depend on them.

But adding this index isn’t only necessary for enabling concurrent refreshes, it also resulted in yet another speedup regarding our full-text search:

> Book.where(id: Books::Compound.search("Fanfiction Carol")).explain(:analyze)

Nested Loop (cost=29.14..37.17 rows=1 width=67) (actual time=0.079..0.083 rows=2 loops=1)

-> HashAggregate (cost=28.85..28.86 rows=1 width=8) (actual time=0.068..0.069 rows=2 loops=1)

Group Key: books_compounds.id

Batches: 1 Memory Usage: 24kB

-> Sort (cost=28.84..28.84 rows=1 width=12) (actual time=0.064..0.064 rows=2 loops=1)

Sort Key: (ts_rank(to_tsvector('simple'::regconfig, COALESCE(books_compounds_1.book_search, ''::text)), '''fanfiction'' & ''carol'''::tsquery, 0)) DESC, books_compounds.id

Sort Method: quicksort Memory: 25kB

-> Nested Loop (cost=20.29..28.83 rows=1 width=12) (actual time=0.045..0.054 rows=2 loops=1)

-> Bitmap Heap Scan on books_compounds books_compounds_1 (cost=20.01..24.27 rows=1 width=57) (actual time=0.028..0.030 rows=2 loops=1)

Recheck Cond: (to_tsvector('simple'::regconfig, COALESCE(book_search, ''::text)) @@ '''fanfiction'' & ''carol'''::tsquery)

Heap Blocks: exact=2

-> Bitmap Index Scan on books_compounds_book_search (cost=0.00..20.01 rows=1 width=0) (actual time=0.025..0.025 rows=2 loops=1)

Index Cond: (to_tsvector('simple'::regconfig, COALESCE(book_search, ''::text)) @@ '''fanfiction'' & ''carol'''::tsquery)

-> Index Only Scan using index_books_compounds_on_id on books_compounds (cost=0.29..4.30 rows=1 width=8) (actual time=0.003..0.003 rows=1 loops=2)

Index Cond: (id = books_compounds_1.id)

Heap Fetches: 0

-> Index Scan using books_pkey on books (cost=0.29..8.30 rows=1 width=67) (actual time=0.006..0.006 rows=1 loops=2)

Index Cond: (id = books_compounds.id)

Planning Time: 0.420 ms

Execution Time: 0.123 ms

Looking at the query plan it becomes clear that by adding an index on the primary key with a unique constraint - which would have been added by Rails by default if it were a regular table - the query planner is able to perform an Index Only Scan against the index_books_compounds_on_id.

After this optimization, the plan now reports an execution time that is 300 times faster than the original search query! Not only that, this design is also more scalable over time, keeping performance comparable even as the data rows and query volume for the materialized view grow.

Round Up

In this article, we’ve looked at a popular approach to add full-text search to a Postgresql backed Ruby on Rails model. Using the pg_search gem, you can transparently add class methods for search like with a regular scope.

However, when you want to extend this functionality across one or more associations, you will experience serious performance implications.

This is where materialized views can help by providing a denormalized, fixed representation of the underlying data that can be optimized by regular GIN indexes.

Indeed, our experiment has shown that we can improve lookup times by two orders of magnitude using this technique.

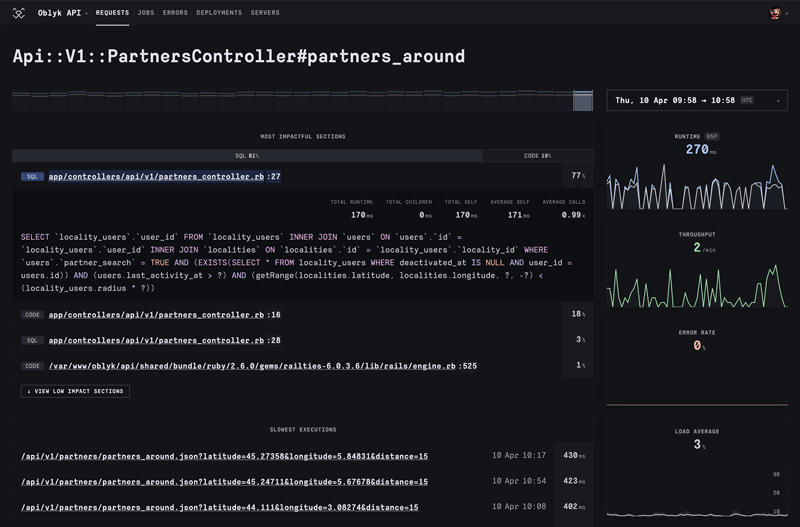

Keep in mind, though, that materialized views bring about an additional maintenance burden because they have to be kept in sync with the underlying data. In essence, we have traded an optimization of the read path by introducing more write operations to the database. However, depending on the size of your database, this tradeoff may be worth the effort. You might want to set up performance monitoring to keep an eye on refresh times, row counts etc. If the volume of refreshes becomes too high, you might want to move from triggering them in a model callback to a recurring job (e.g. every 5 minutes).

Editor’s note: If you feel like digging deeper into PostgreSQL optimizations, we can’t recommend enough the excellent High-Performance PostgreSQL for Rails book by Andrew Atkinson.